Analyzing New Malware

In the ever-changing world of cybersecurity, new threats appear and evolve on a regular basis. Sharing information about them is an important part of fighting cybercrime and keeping people and organizations safe. Being prepared lets you make the best use of your time, and your team’s, when analyzing an emerging threat.

In this blog, we cover various situations that researchers encounter when they need to publish their findings, offer suggestions on how to handle them, and outline a workflow for performing the analysis efficiently. Finally, we apply this strategy to a ransomware sample.

Challenges and Solutions

When a new threat emerges, there are a few common challenges that researchers face during analysis. Here are a few ways to handle them so you can produce clear and purposeful findings.

Urgency

In many cases, there is only a narrow window of time in which to publish if the topic is to stay timely and the material relevant.

The solution is to focus on the most important questions that need answers.

- Who are the potential readers of the article? How will they benefit from reading it?

- How will the time costs associated with each section compare to its benefits?

Beginning your work by answering these questions will help shape the material in the right direction and manage time properly.

Novelty

For many attacks that hit the news, the related malware may not yet have been analyzed by other researchers. This increases the amount of work required to understand all parts of the relevant functionality, as there is little to no information to use as a starting point.

To address this issue, it is worth remembering that in many cases, modern malware families and attacker groups already have some roots. Tracking these connections allows researchers to find previous iterations of similar projects and reduce the amount of time required to understand the malware’s functionality.

Complexity

The consequences of simple cyberattacks aren’t generally big enough to attract the attention of the public. What that means for researchers is that if something is worth writing an article about, it’s likely to be quite complex and therefore time-consuming to analyze.

The solution here might be to split the big task into smaller tasks. Apart from making it easier to prioritize based on the article’s focus, this also allows the analysis to be done by a group, with different people focusing on different parts of the functionality. Exchanging knowledge on a regular basis about what has already been covered will help the team to be efficient and not waste time analyzing the same parts multiple times.

Suggested Workflow

Here is a common workflow that should allow researchers to approach the analysis of new executable samples efficiently and effectively.

The second step, Behavioral Analysis, refers to the blackbox-style analysis that generally involves the execution of a sample under various monitoring tools and on sandboxes. The Dynamic Analysis step refers to the use of a debugger to execute instructions.

Applying the Workflow to Malware Analysis

Let’s take a look at a DarkSide ransomware sample, which we analyzed earlier this year:

0a0c225f0e5ee941a79f2b7701f1285e4975a2859eb4d025d96d9e366e81abb9
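Before starting, it is worth confirming that the file on disk really is the sample under discussion. The identifier above has the length of a SHA-256 digest (64 hexadecimal characters), so a minimal Python sketch could compute the digest of a local copy and compare it. The function names and the file path are our own placeholders, not part of any tool mentioned in this post.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read in fixed-size chunks so large samples do not need to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path: str, expected_hex: str) -> bool:
    """Compare a file's digest with an expected value, ignoring case and whitespace."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

Calling `matches_expected("sample.bin", "<digest from the report>")` on a hypothetical local copy returns `True` only if the file is byte-for-byte the one being described.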

Step 1: Triage

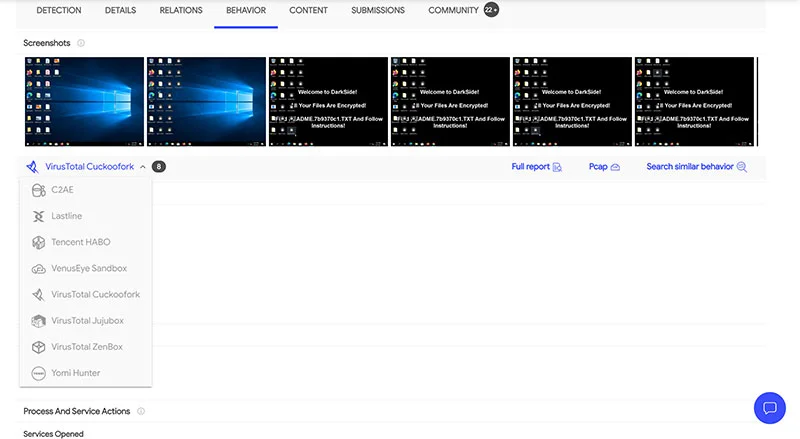

At the time of analysis, the sample had already been uploaded to VirusTotal, so all members of the cybersecurity community could access it and see AV vendors’ detections as well as the sandbox logs in the Behavior tab. Note that VirusTotal now supports multiple sandboxes, so try a few to find a good report.

A quick look at the sample in a hex editor reveals that there is a high-entropy block at the end. There are multiple things it could be: the next stage payload or another module, a blob containing encrypted strings or configuration, etc. Static analysis will be required to understand it.
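Spotting a high-entropy region does not have to be done by eye. As a rough sketch, the Python below computes the Shannon entropy of consecutive blocks of a file; values approaching 8 bits per byte typically suggest compressed or encrypted data. The 4096-byte block size and the function names are arbitrary choices made for illustration.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of data in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def block_entropies(data: bytes, block_size: int = 4096):
    """Yield (offset, entropy) for each consecutive block of the input."""
    for offset in range(0, len(data), block_size):
        yield offset, shannon_entropy(data[offset:offset + block_size])
```

Reading the sample with `open(path, "rb").read()` and printing the pairs from `block_entropies` gives a quick map of where the suspicious data starts and how large it is.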

There are virtually no meaningful strings or API names:

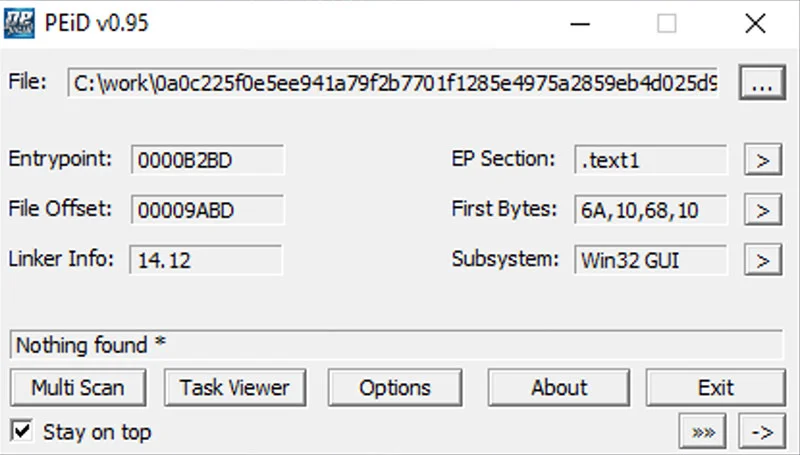

This is a strong indicator that the sample is obfuscated, with APIs resolved dynamically and strings encrypted. Running a packer detection tool (PEiD with custom community signatures) shows no indication that a public packer has been used in this case.
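The string check described above is easy to script when a dedicated tool is not at hand. The following is a minimal sketch, assuming a minimum length of five characters, that pulls printable ASCII and UTF-16LE runs out of a binary; an almost empty result for a sizeable executable points to the same conclusion.

```python
import re


def extract_ascii_strings(data: bytes, min_len: int = 5):
    """Return printable ASCII runs of at least min_len characters."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(data)]


def extract_wide_strings(data: bytes, min_len: int = 5):
    """Return UTF-16LE runs of printable ASCII characters (common on Windows)."""
    pattern = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_len)
    return [m.group().decode("utf-16-le") for m in pattern.finditer(data)]
```

The threshold is a trade-off: lower values surface short identifiers but also a lot of noise from random byte sequences.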

Step 2: Behavioral Analysis

By the time the analysis began, the sample had already been submitted to various public sandboxes by other community members, so lots of information could be taken from there.

Step 3: Unpacking

Checking cross-references to the high-entropy block in the disassembler, we can see that this doesn’t seem to be the next stage payload, as there is no control transfer to it or to related blocks. In addition, a quick look around the disassembly confirms that the sample is indeed obfuscated rather than packed: multiple APIs are resolved dynamically by hash, and strings are encrypted.
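To illustrate what resolving APIs by hash means in practice: the code stores only a numeric hash for each function it needs and walks a module’s export table at run time until it finds a name with the same hash. An analyst can reverse this by hashing a list of known export names and building a lookup table. The sketch below uses the widely seen ROR13 additive hash purely as a stand-in; the algorithm used by this particular sample is not described here and would need to be taken from the disassembly.

```python
def ror32(value: int, count: int) -> int:
    """Rotate a 32-bit value right by count bits."""
    count %= 32
    return ((value >> count) | (value << (32 - count))) & 0xFFFFFFFF


def ror13_hash(name: str) -> int:
    """Illustrative ROR13 additive hash; an assumption, not this sample's algorithm."""
    h = 0
    for byte in name.encode("ascii"):
        h = (ror32(h, 13) + byte) & 0xFFFFFFFF
    return h


def build_lookup(names, hash_func=ror13_hash):
    """Map hash value -> API name for a list of candidate export names."""
    return {hash_func(name): name for name in names}
```

Swapping `hash_func` for a reimplementation of the routine found in the sample turns every hash constant in the disassembly into a readable API name.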

Step 4: Static and Dynamic Analysis of the Actual Functionality

To navigate the disassembly efficiently, we need to make APIs and strings easily readable.

For APIs, this is very easy to achieve with dynamic analysis, as all the APIs are resolved in a single function. Therefore, letting it execute until the end will give us all the APIs’ addresses. To propagate their names to the pointers, use the standard renimp.idc script shipped as part of IDA Pro.

This approach won’t work for strings, as they’re decrypted on an ad-hoc basis just before being used, rather than in a single place. Therefore, to make them easily visible, scripting will be required. In our blog on DarkSide, we have already provided such a script that will attempt to find all the encrypted strings and decrypt them.

That’s it. Now that both strings and APIs are visible, all that remains is to go carefully through the cross-references, keep the markup for the corresponding functions up to date, and describe all potentially interesting information (subject to the target audience) in the article.

Conclusion

Knowledge sharing is an important part of the cybersecurity field that allows us to quickly adapt to new threats and minimize their associated risks. By properly focusing our efforts, we can improve the quality of this process and make the world a safer place.

Extra Tips

- Know your audience – the content of the technical blog post (and the corresponding questions to answer) will be very different from a news article for the general public

- Consider teamwork to speed up the process – asking for help at an early stage increases the total time available for the analysis

- Have your templates ready – simple scripts to decrypt / decode / decompress the data may help avoid unnecessary delays
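
As an illustration of the last tip, a template might look like the Python sketch below: a handful of small helpers for the most common encodings, each returning `None` instead of raising when the input does not fit. The helper names and the 95% printable threshold are our own assumptions; real samples will often need a custom routine on top of these.

```python
import base64
import binascii
import zlib


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR data with a repeating key."""
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def try_base64(data: bytes):
    """Return the Base64-decoded bytes, or None if the input is not valid Base64."""
    try:
        return base64.b64decode(data, validate=True)
    except (binascii.Error, ValueError):
        return None


def try_zlib(data: bytes):
    """Return the zlib-decompressed bytes, or None if decompression fails."""
    try:
        return zlib.decompress(data)
    except zlib.error:
        return None


def printable_ratio(data: bytes) -> float:
    """Fraction of bytes that are printable ASCII."""
    if not data:
        return 0.0
    return sum(0x20 <= b <= 0x7E for b in data) / len(data)


def brute_single_byte_xor(data: bytes, threshold: float = 0.95):
    """Return (key, plaintext) candidates whose output is mostly printable."""
    results = []
    for key in range(1, 256):
        plain = xor_bytes(data, bytes([key]))
        if printable_ratio(plain) >= threshold:
            results.append((key, plain))
    return results
```

Keeping helpers like these in one file means that, under time pressure, testing a hypothesis about a blob is a one-line call rather than a fresh script.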